Machines of the Mind: How Artificial Intelligence is Shaping Our World (Part 1: History of AI)

10 minute read - The impact of artificial intelligence (AI) is sparking intense debate, even among the most influential and renowned industry leaders and public figures, about its potential to reshape jobs, industries, and society. It’s evident that we are entering uncharted territory, but are we facing a utopia of innovation or a dystopian nightmare? Every previous major wave of innovation has had one constant: fear. Fear of what the new innovation means for jobs, society, and the future. AI is no exception.

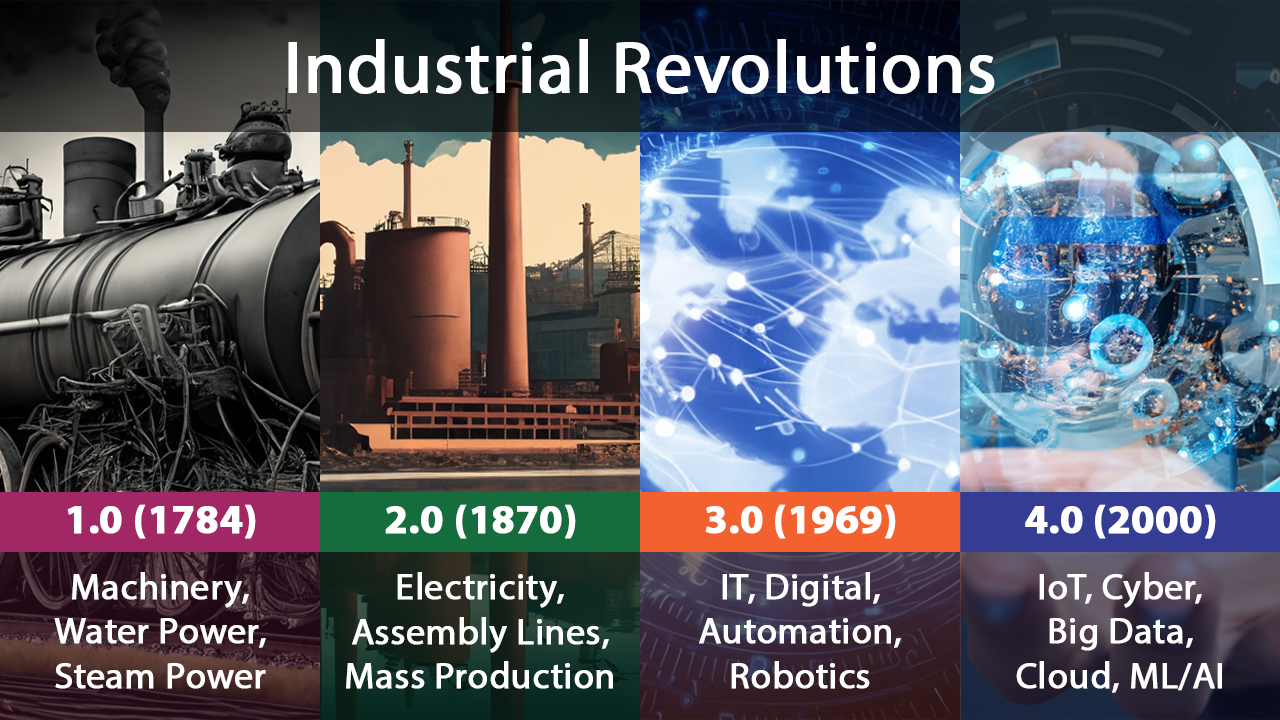

The Evolution of Revolutions

1st Industrial Revolution

Steam power, the hallmark of the 1st Industrial Revolution, completely transformed transport and manufacturing. Before steam power, horses were used to transport goods - an expensive, slow, and high-maintenance option. Horses needed rest, could only carry a certain weight, and required constant care to remain in good condition. Over the course of half a century, horses were replaced with more efficient machines. This shift spurred increased productivity, higher wages, and improved living standards.

2nd Industrial Revolution

The 2nd Industrial Revolution was fuelled by new sources of energy: electricity, gas, and oil. This was one of the most prolific periods of change in the modern world, propelling industrial and city growth and enabling mass production. Henry Ford’s assembly line epitomised this era, and Ford’s Model T, launched in 1908, brought affordable cars to the masses - a transformation as disruptive as today’s AI-driven automation. This period saw cheaper mass production of everything from tools to weapons to transport.

3rd Industrial Revolution

The 3rd Industrial Revolution introduced digital communication, which forever altered how we transmit information, do business, and interact with each other. New electronics allowed IT systems to automate production and handle supply chains on a global scale. Computers were no longer just tools for scientists - they became integral to everyday life, reshaping how industries operated and how individuals connected with one another. Communication and information became as valuable as the products they enabled.

4th Industrial Revolution

The 4th Industrial Revolution has started to blur the lines between humans and technology, as our devices and sensors have become extensions of who we are. Advanced biotechnology, robotics, and AI are now converging, creating endless possibilities for innovation and challenging our very definitions of life and consciousness. AI is now advancing at a remarkable pace and will continue to push the boundaries of creativity and problem-solving - just like steam engines, electricity, and computers did before. But to trace the evolution of AI, we need to step back in time to 1950.

The Early Days of AI

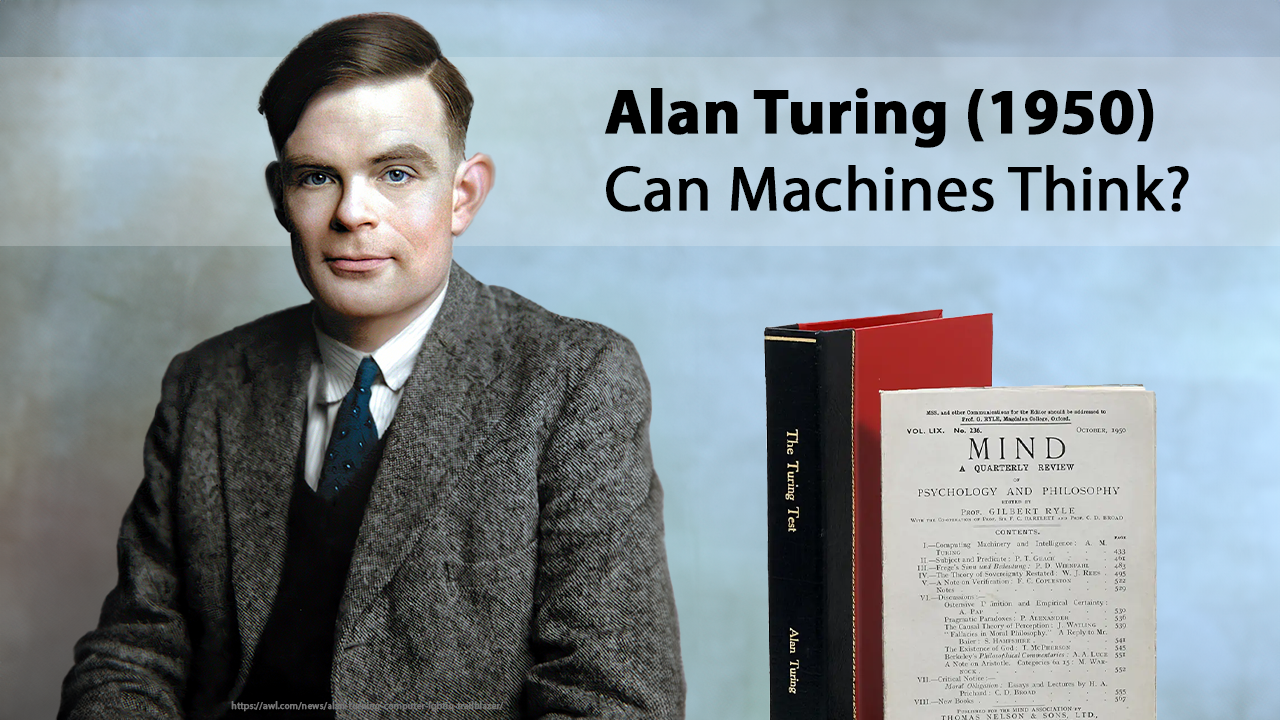

1950: Alan Turing - “Can Machines Think?”

In 1950, Alan Turing, who is also known for his work cracking the Enigma code during WW2, famously asked the groundbreaking question: “Can machines think?”. At that time, computers were limited to performing specific tasks like calculations, while organisations such as NACA (the predecessor of NASA) employed human “computers” to solve more complex problems such as flight trajectories. Turing imagined a future where machines could go far beyond following instructions and instead learn, grow, and adapt - something unheard of at the time. He believed that at some point machines could show intelligence, or even surpass humans. Though the technology of the time could not prove his theory, he devised a test he called “The Imitation Game”, now known as the “Turing Test”, in which a human interrogator interacts with both a machine and a human and tries to determine which responses came from the machine. If the machine successfully fools the interrogator into thinking it is human, Turing argued, we should consider that machine capable of thinking. Whether passing the test actually implies intelligence remains controversial.

1955: Paving the Way

As computers began to store more information and became faster, more affordable, and more accessible, Turing’s question “Can machines think?” laid the foundation for what AI is built upon today.

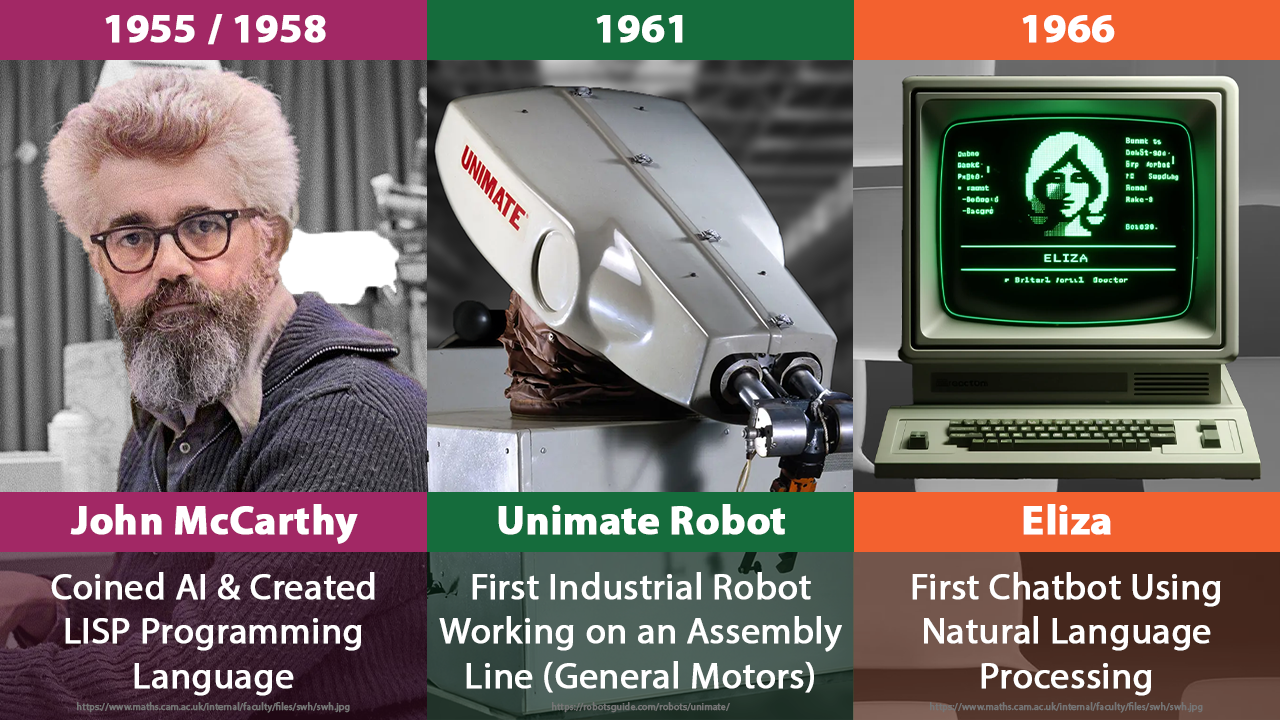

1958: John McCarthy and the Birth of AI

John McCarthy, often called the father of AI, coined the term “artificial intelligence” in 1955. In 1956, he organised the first major AI conference, held at Dartmouth College, to explore how machines could mimic human reasoning, problem-solving, and self-improvement. One of his most significant contributions came in 1958 when he developed LISP, one of the earliest programming languages designed for AI research. LISP remained influential for decades and has been used in applications ranging from robotics to voice recognition to fraud detection.

1961: Unimate - The First Industrial Robot

In 1961, AI took another major leap with Unimate, the world’s first industrial robot. Unimate, a hydraulic manipulator, began work at General Motors, performing dangerous tasks like welding and handling die castings. Its introduction revolutionised manufacturing - robots could now work tirelessly, increasing productivity, improving product quality, and reducing labour costs. Unimate also made factories safer, taking over hazardous jobs from humans. This innovation sparked widespread adoption of automation, transforming industries far beyond car manufacturing.

1966: ELIZA - The First Chatbot

In 1966, ELIZA, the first chatbot, simulated a psychotherapist’s conversation style, using an early form of natural language processing based on keyword pattern matching to engage users. Though simple by today’s standards, ELIZA raised important questions about whether machines could convincingly mimic human dialogue. People typed in their questions and often felt they were talking to a real person, marking a huge step forward in AI's conversational capabilities. Its legacy continues today, influencing modern AI assistants like Siri and Alexa.
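To give a feel for how little machinery this style of conversation needs, here is a minimal Python sketch of ELIZA-like keyword matching. It is an illustration only: the rules, the `respond` and `reflect` functions, and the canned replies are hypothetical and are not taken from the original ELIZA script.

```python
import re

# Hypothetical rules for illustration: a pattern to look for and a reply template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person and second-person words so the reply reads naturally.
REFLECTIONS = {"my": "your", "i": "you", "me": "you", "am": "are", "your": "my"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Lower-case the fragment and flip pronouns, e.g. 'my job' -> 'your job'."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input):
    """Return the reply for the first matching rule, or a neutral fallback."""
    cleaned = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I am worried about my job"))
```

The program has no understanding of what is being said. It simply spots a keyword, reshuffles the user's own words, and hands them back as a question, which is part of why ELIZA's apparent empathy surprised people at the time.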

AI Boom & Winter

During the next couple of decades, AI experienced a period of significant highs and lows.

1980-1987: The AI Boom

The AI Boom saw rapid growth and widespread optimism. Expert Systems were developed that could analyse data and make rule-based decisions. Deep learning techniques also emerged, allowing AI to learn from its mistakes, which paved the way for the first autonomous drawing program and driverless car. Governments saw the potential in AI and began funding research, AI conferences flourished, and science and engineering skills were highly sought after.
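As a rough illustration of what “rule-based decisions” means, the Python sketch below applies a handful of if-then rules to a set of known facts until nothing new can be concluded, a technique usually called forward chaining. The car-diagnosis rules, the fact names, and the `infer` function are all made up for this example and do not come from any real expert system.

```python
# Hypothetical rules: (facts that must all be present, fact to conclude).
RULES = [
    ({"engine_will_not_start", "lights_are_dim"}, "battery_is_flat"),
    ({"engine_will_not_start", "fuel_gauge_empty"}, "out_of_fuel"),
    ({"battery_is_flat"}, "recommend_recharging_battery"),
    ({"out_of_fuel"}, "recommend_refuelling"),
]


def infer(initial_facts, rules=RULES):
    """Forward chaining: keep applying rules until no new fact is added."""
    facts = set(initial_facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known facts.
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


if __name__ == "__main__":
    observed = {"engine_will_not_start", "lights_are_dim"}
    # Show only the conclusions the rules added to the observations.
    print(sorted(infer(observed) - observed))
```

Every conclusion can be traced back to the rule that produced it, which made these systems easy to explain. The flip side is that every rule has to be written and maintained by hand, which hints at why they became so costly to build.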

1987-1993: The AI Winter

But the AI Winter was just around the corner. The high expectations of the boom were not met: AI systems proved costly to develop, and their limitations became apparent. Governments and private investors began cutting funding, AI research slowed, and public interest faded.

Back on Track

Despite these setbacks, innovation continued in the background. By the 1990s, AI research began to bounce back. Machine learning algorithms became more efficient, laying the groundwork for integration into the healthcare and finance industries and demonstrating the ability of these technologies to transform industries and everyday life.

Key Milestones

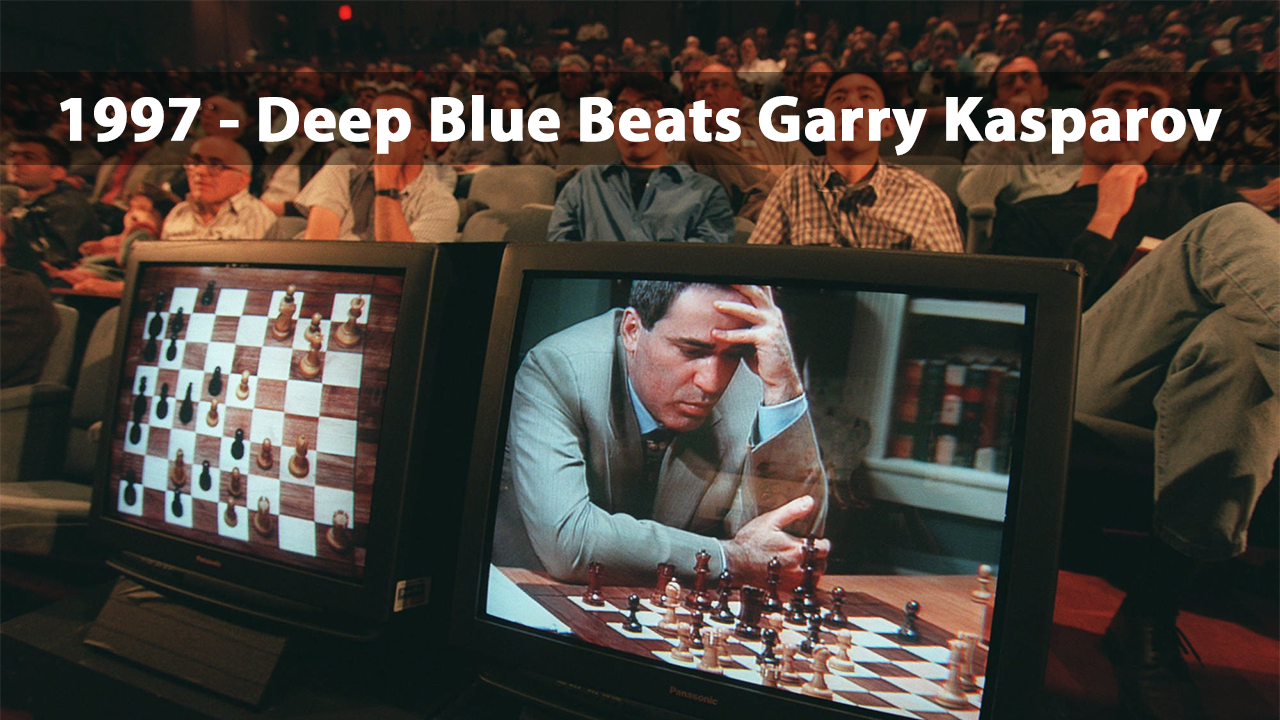

1997: Deep Blue Beats Garry Kasparov

One of the most historic moments for AI took place on a chessboard in 1997. Chess, long considered the ultimate test of human intellect, creativity, and strategy, became the stage for a larger battle - whether AI could surpass human cognitive abilities. IBM’s Deep Blue, a supercomputer designed for chess, defeated world champion Garry Kasparov, marking the first time a computer won a match against a reigning champion under standard tournament conditions. But this wasn’t just a chess game. The match represented a symbolic clash between human ingenuity and machine precision, captivating audiences worldwide. Deep Blue showcased the power of brute-force computing, evaluating up to 200 million chess positions per second, yet its victory raised questions about the nature of intelligence, as it relied on computational power rather than creativity. Nevertheless, this event brought AI into public consciousness and symbolised its growing potential to challenge human expertise across various fields.

1997: Speech Recognition

While Deep Blue was making headlines, 1997 also saw a significant breakthrough in speech recognition with Dragon Systems releasing its software for Windows. This technology enabled computers to convert spoken words into text, laying the groundwork for natural language processing. Though initially limited - requiring user training and struggling with accents - it marked the start of making machines more intuitive. Over the following years, speech recognition evolved into mainstream virtual assistants, leading to innovations like Apple's Siri, Amazon Alexa, Microsoft Cortana, and Google Assistant, fundamentally changing how we interact with technology. Today, we use virtual assistants to schedule meetings, set reminders, control smart devices, and even answer philosophical questions. This technology, now an essential part of modern life, owes its roots to the pioneering work of the late 90s.

2000: Robotic Emotion

As AI advanced into the 21st century, the focus shifted toward creating machines that could not only process data but also simulate emotions and interact with humans on a more personal level. In 2000, Professor Cynthia Breazeal from MIT developed Kismet, one of the first robots capable of mimicking human emotions through facial expressions. Kismet’s design enabled it to engage in emotional communication, laying the groundwork for affective computing and for more advanced social and emotional AI systems.

This concept evolved with the introduction of Sophia by Hanson Robotics in 2016, a humanoid robot capable of recognising and responding to human emotions. Sophia can hold conversations, express opinions, and make facial expressions that mirror human reactions. Her lifelike appearance and advanced AI allow her to interact in ways that feel almost human. Sophia became a celebrity, engaging with industries, appearing in interviews, addressing the United Nations, and even being granted Saudi Arabian citizenship. Sophia represents a significant leap in human-robot interaction, raising profound questions about consciousness and emotion. As AI continues to advance, robots are increasingly seen not just as tools but as entities that can interact with us on a personal, emotional level, reshaping the role of machines in our lives.

2003: Robotic Navigation

The early 2000s marked impressive advancements in autonomous robotics, highlighted by NASA's Spirit and Opportunity rovers, which launched in 2003 and landed successfully on Mars in January 2004. Equipped with AI systems, these rovers could navigate stretches of the surface independently, analyse terrain, avoid obstacles, and make driving decisions without waiting for human intervention. Their success demonstrated the potential of autonomous systems in challenging environments and inspired the development of ground-based robots. Companies like Boston Dynamics built on this progress, creating agile robots capable of navigating complex terrain without human guidance and enhancing safety and efficiency across various industries. With each advancement, we're entering new eras where machines don’t just follow commands but work alongside us, enhancing our capabilities and pushing the boundaries of innovation.

2006: Personalised AI

In the late 2000s and early 2010s, AI began to significantly influence social media, gaming, and streaming services. By harnessing vast amounts of user data, companies started utilising AI to deliver tailored content and enhance the user experience (UX). For instance, Facebook improved its news feed algorithm to display posts most relevant to users based on their activity, while Netflix used AI to recommend shows and movies according to viewing history. This data-driven approach made platforms more engaging and completely changed the way people consume entertainment.

A key milestone came in 2010 with the launch of Microsoft’s Kinect for the Xbox 360, one of the first mainstream gaming devices to track full-body movement without a controller. This innovation employed AI, computer vision, and machine learning to create an immersive gaming experience. Overall, this era marked AI's transition from industrial applications to everyday consumer technology. Ever-growing data collection allowed these systems to learn and evolve, paving the way for more advanced AI applications to personalise, predict, and enhance experiences in the future.

2016: AlphaGo Beats Lee Sedol

In 2016, we witnessed a groundbreaking moment in AI when AlphaGo, developed by Google DeepMind, defeated Lee Sedol, one of the world's top Go players. This wasn’t just another step in AI’s evolution; it was a monumental leap into a realm many believed would remain out of reach for machines for years to come. Go, an ancient board game from China, has long been considered the ultimate test for AI. Both chess and Go have a finite number of possible moves, but Go is vastly more complex: its 19x19 board offers far more options at every turn, making the number of possible games astronomically large.

Historically, AI struggled to master Go, even though the game was seen as a perfect testing ground due to its clear rules. Previous AI systems had only achieved intermediate levels of play. However, AlphaGo distinguished itself by utilising neural networks and machine learning, learning from a dataset of thousands of human games rather than relying solely on brute-force calculations. AlphaGo’s victory captivated global audiences as it highlighted AI's potential to enhance human creativity. During the match, it played creative moves that Go players have since studied and learned from. This prompted a reevaluation of human-machine interaction, illustrating that AI could not only compete with human intelligence but also provide new insights into complex problems.

Following its success, AlphaGo evolved into AlphaGo Zero, which trained itself without human data, and then AlphaZero, which could play multiple games like chess and Shogi, showcasing AI's flexibility. These developments underscored AI's ability to exceed human understanding in certain domains, raising important discussions about the opportunities and challenges posed by such technologies. Overall, AlphaGo's triumph was a significant milestone, demonstrating AI's capacity to innovate and inspire.

Conclusion

As we reflect on the pivotal moments in AI’s development, it’s clear how instrumental these milestones were in reshaping how we interact with technology in our daily lives. The integration of AI into social media, gaming, and streaming services marked a transformative shift, enabling platforms like Facebook and Netflix to offer hyper-personalised experiences that engage users in unprecedented ways.

The groundbreaking achievement of AlphaGo in 2016 further highlighted AI's potential, showcasing its ability to learn, innovate, and even teach us new strategies in the ancient game of Go. AlphaGo not only captivated the world but also opened up discussions on AI's future implications for creativity, problem-solving, and our relationship with technology.

As we stand on the cusp of new developments in AI, it’s essential to consider both the incredible opportunities and the challenges these advancements present. From personalised experiences in our everyday apps to sophisticated AI agents that augment human intelligence, the journey of AI is far from over.

Stay tuned for Part 2, where I’ll continue exploring our current landscape with ChatGPT and other AI agents, as well as what the future has in store for us.

Until next time. I hope you enjoyed the read. GB.


References

- Alan Turing (1950) - Can Machines Think. Available at: https://awl.com/news/alan-turning-computer-lgbtiq-trailblazer/. [Accessed 10 October 2024]

- AlphaGo Beats Lee Sedol (2016). Available at: https://www.theatlantic.com/technology/archive/2016/03/the-invisible-opponent/475611/. [Accessed 10 October 2024]

- Boston Dynamics - Do You Love Me?. Available at: https://www.youtube.com/watch?v=fn3KWM1kuAw. [Accessed 10 October 2024]

- Deep Blue Beats Garry Kasparov (1997). Available at: https://www.washingtonpost.com/history/2023/05/22/garry-kasparov-chess-deep-blue-ibm/. [Accessed 10 October 2024]

- ELIZA Chatbot (1966). Available at: https://www.maths.cam.ac.uk/internal/faculty/files/swh/swh.jpg. [Accessed 10 October 2024]

- John McCarthy (1955). Available at: https://www.maths.cam.ac.uk/internal/faculty/files/swh/swh.jpg. [Accessed 10 October 2024]

- Sophia the Robot by Hanson Robotics. Available at: https://www.youtube.com/watch?v=BhU9hOo5Cuc. [Accessed 10 October 2024]

- Unimate Robot (1961). Available at: https://robotsguide.com/robots/unimate/. [Accessed 10 October 2024]