Human and AI Collaborative Intelligence

5 Minute Read - There is a lot of debate about whether the rise of full artificial intelligence will threaten human existence (I explore this further in my article 'Are Humans the Next Horse? The Rise of the Robots'). Only time will tell whether that fear is justified, but we can say with certainty that most technological advances pose security risks when systems are poorly designed, misused, or hacked, particularly where little or no regulation is in place.

Human on the Loop

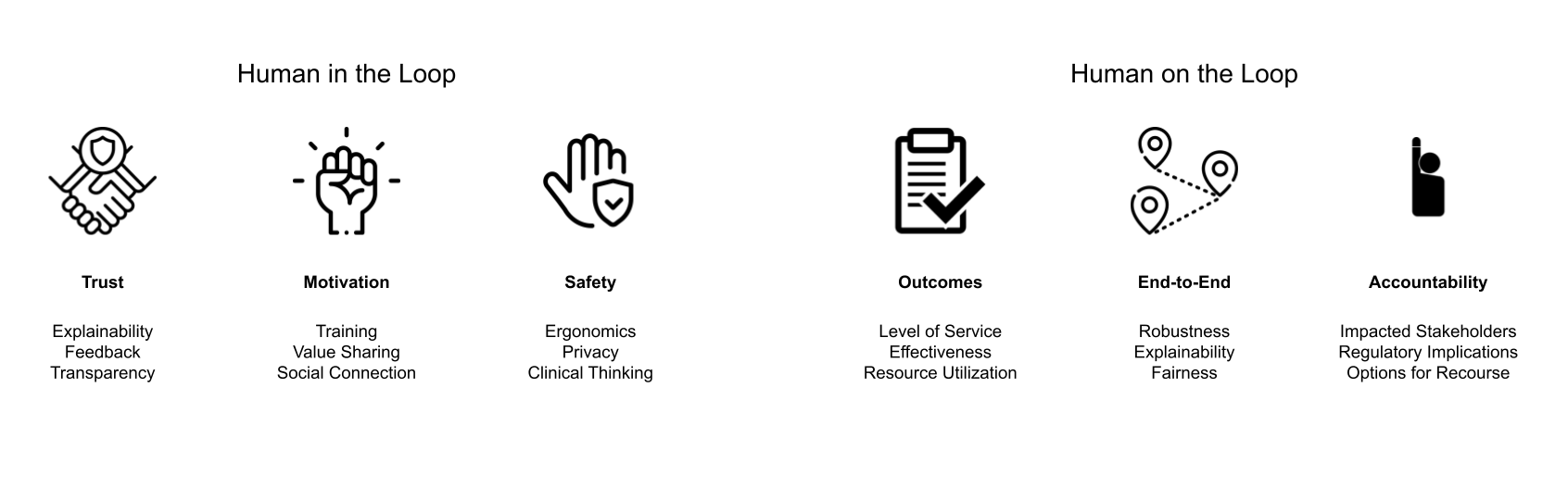

A theme raised at many AI conferences and in many articles is the importance of keeping a human element in the process of developing AI solutions. A healthy combination of automation and human collaboration helps build trust in these systems, especially those that are prone to change, involve inherent uncertainty, require governance oversight, or make decisions with critical consequences. One interesting consideration, raised by Jean-François Gagné, Head of AI Product Management and Strategy at ServiceNow, is the difference between designing 'human in the loop' and 'human on the loop' systems (Gagné, 2021).

Depending on the type of application and its expected outcomes, it is important to choose the right type of system. In a 2020 defence article, Terrence J. O'Shaughnessy, commander of the United States Northern Command and of the North American Aerospace Defense Command (NORAD), stated that the military should move from a human in the loop model (where a human retains control over starting and stopping actions) to a human on the loop model (which still allows oversight but does not require pre-approval, pushing human control farther from the centre of automated decision-making) (FedScoop, 2020).
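To make the distinction concrete, here is a minimal Python sketch, assuming a deliberately simplified setting in which each automated action is just a string and the human's judgement is modelled either as an approval callback (in the loop) or as a halt switch (on the loop). The `Controller` class, its methods, and the action names are hypothetical illustrations; they are not taken from Gagné's work, from FedScoop, or from any real defence system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical illustration only: a toy controller contrasting the two
# oversight models. Not based on any real system or library.
@dataclass
class Controller:
    log: List[str] = field(default_factory=list)
    halted: bool = False

    def in_the_loop(self, action: str, approve: Callable[[str], bool]) -> bool:
        """Human in the loop: the action runs only after explicit human approval."""
        if approve(action):
            self.log.append(f"executed:{action}")
            return True
        self.log.append(f"rejected:{action}")
        return False

    def on_the_loop(self, action: str) -> bool:
        """Human on the loop: the action runs without pre-approval,
        unless the supervising human has already halted the system."""
        if self.halted:
            self.log.append(f"skipped:{action}")
            return False
        self.log.append(f"executed:{action}")
        return True

    def halt(self) -> None:
        """The supervising human's override: block all further automated actions."""
        self.halted = True


controller = Controller()
controller.in_the_loop("flag-anomaly", approve=lambda a: False)  # human declines
controller.on_the_loop("update-track")    # runs with no pre-approval
controller.halt()                         # human observes and intervenes
controller.on_the_loop("update-track-2")  # blocked after the halt
print(controller.log)
# ['rejected:flag-anomaly', 'executed:update-track', 'skipped:update-track-2']
```

The trade-off shows up in where the human sits. In the first mode the human is on the critical path of every action, which is slower but gives the tightest control over high-consequence decisions; in the second, automation runs at machine speed and the human's role shifts to monitoring and intervening when something looks wrong.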

Diverse and Cognitive AI

Almost every conversation about AI eventually turns to ethics. I have written a number of articles on ethical AI, including 'Are Humans the Next Horse? The Rise of the Robots' and my three-part series starting with 'The Rise of Ethics in Artificial Intelligence (Part 1: Privacy)'. Several important factors need to be considered when building AI in a collaborative environment:

- Leadership: When good leadership is in place, almost anything is achievable. Leadership sets the example, which should then be reinforced by bringing complementary product, technology, and compliance teams to the table for decision-making.

- Partnerships: When working at the cutting edge of technology, it is crucial to seek out opportunities to build relationships and form partnerships, maintaining dynamic links with technology, industry, and regulatory frameworks.

- Ethics-Aware: It is not enough just to teach ethics; you need to teach people how to be ethical. This process should be embedded not only in technology teams but across the entire company. This ground-up awareness helps ensure alignment across every stage of the product life cycle towards developing secure, transparent, and connected products.

- Users: When building products, you need to consider societal and generational differences to understand how users will interact with and use the applications. User experience (UX) design should be at the heart of the products and act as an extension of the customers, enabling them to be part of the creation journey and to see the benefits, starting from an understanding of risk and usage.

Final Thoughts

In a recent article, CBC News reported a Google engineer's claim that the company's AI has become sentient (CBC News, 2022). The sentience debate touches on technology, ethics, and philosophy: what it means to be alive, and whether we will ever know for sure that an AI has gained consciousness. Either way, a huge amount of progress is still needed before AI can become fully autonomous, if it ever does, and that progress will require substantial human effort to guide it along the way. Do you think AI will ever become fully sentient? Until next time, I hope you enjoyed the read. GB.

Hashtags

#AI #ArtificialIntelligence #DataScience #MachineLearning #ML #Trustworthy #Ethics #Policy #Regulations #Governance #Data #DecisionMaking #DocumentIntelligence #DueDiligence #Compliance

References

- CBC News. (2022). 'A Google engineer says AI has become sentient. What does that actually mean?'. Available at: https://www.cbc.ca/news/science/ai-consciousness-how-to-recognize-1.6498068. [Accessed 10 July 2022]

- FedScoop. (2020). 'AI needs humans "on the loop" not "in the loop" for nuke detection, general says'. Available at: https://www.fedscoop.com/ai-should-have-human-on-the-loop-not-in-the-loop-when-it-comes-to-nuke-detection-general-says/. [Accessed 13 December 2021]

- The Conversation. (2020). Cover figure. Available at: https://theconversation.com/will-ai-take-over-quantum-theory-suggests-otherwise-126567. [Accessed 13 December 2021]

- Gagné, Jean-François. (2021). 'Designing for the Humans in the Loop – Part III'. Available at: https://jfgagne.ai/blog/designing-for-the-humans-in-the-loop-part-iii. [Accessed 15 December 2021]