The Rise of Ethics in Artificial Intelligence (Part 1: Privacy)


As artificial intelligence (AI) starts to consume our lives, ethics has never been more important than it is today. We are moving towards an era where, for the first time, experts are contradicting each other about the uncertainty of our future. Ethical considerations need to be at the forefront of the brightest minds who will be developing the algorithms and machines of the future. In the next three articles, I will take a snapshot look at the rise of ethics, starting with privacy, followed by content ownership, the implications of AI, what is being done to ensure trustworthy AI, and some interesting areas to keep up to date with. All thoughts, views and comments are my own.

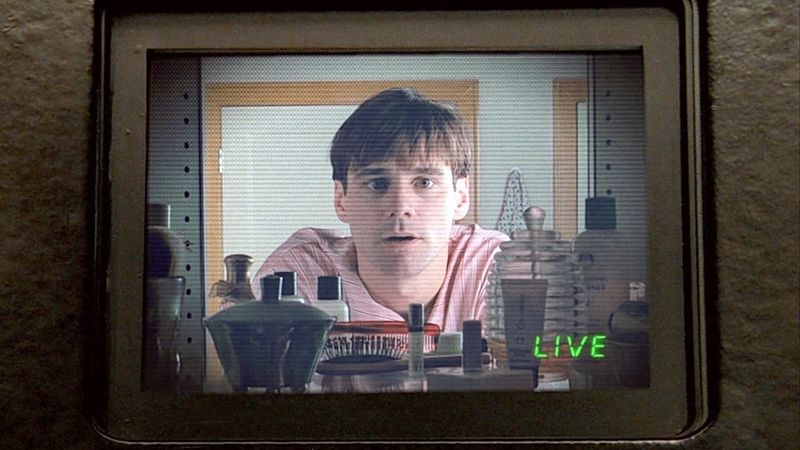

The Truman Effect

The very first breath of today’s generation is almost an acceptance of the terms that our face will be immediately photographed and presented to the social realms for those all-exclusive ‘likes’. We’re pretty much born without a choice, but does it get any better? As we roam the streets, do our shopping, drive our cars, or pop into our neighbours’ for a cup of coffee, our faces are increasingly captured and recognised by surveillance cameras. How many times, on average, do you think you are caught on surveillance cameras per day? Well, in the United States, it is estimated that the average citizen is caught up to 75 times per day on surveillance cameras, whereas in London, it is estimated that the average person is caught up to 300 times per day (Reolink, 2018). Some argue that these cameras are invading our privacy, whereas others argue that they are keeping us safe. Either way, we need to look for ways to maintain our privacy whilst also keeping us safe.

Figure 1: The Truman Show

I'm a Good Citizen

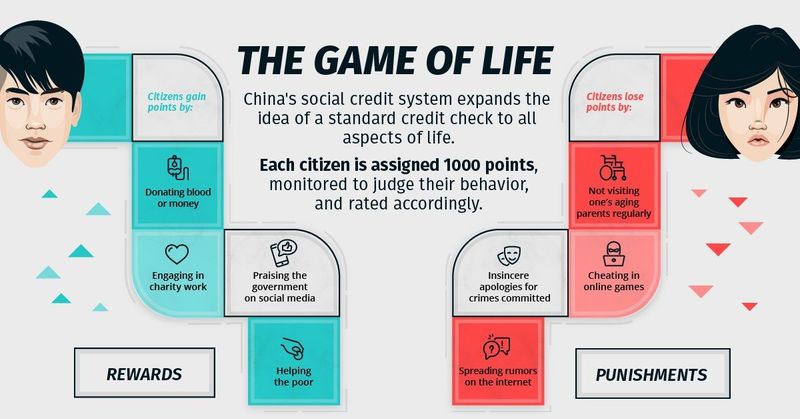

In one of my previous articles, “We Are At War... A Cyber War; With 20 Billion Connected Devices. Just How Safe Do You Feel?”, I discussed how China, in its move towards a cashless economy, had reached almost $13 trillion in mobile transactions in 2017, a figure that rose to $41.51 trillion in 2018 (China Daily, 2019). More and more of our lives are becoming digitalised, so much so that China has recently started scoring citizens’ ‘social credit’ based on whether they have committed any minor offenses. In 2018, this ‘trustworthy’ scoring (Independent, 2016) stopped 17.5 million people from buying airplane tickets, 5.5 million from hopping on a train, 290,000 people from getting a high-paying senior management job, and 128 people from leaving the country because they had not yet paid their taxes (Fortune, 2019). The concept is extremely interesting, but do you think it is a good idea? Will it force or encourage people to be better citizens, or is it placing too much power in the government’s hands? What sort of catastrophes could be caused if there was a data breach – could a person essentially be erased from ever existing?

Figure 2: China’s Social Credit System

How Did You Know I Would Like That?

Does this sound familiar… you are browsing online for a product, and the next day you are on a completely different website when all of a sudden you notice an advert displaying a product similar to the one you were looking at previously? Is this just a lucky coincidence, or is it that your browsing profile and data have been shared across different sites? Our personal data is being shared to drive extremely personalised campaigns and customised services, but where do we draw the line between delivering the best digital experience and violating our privacy?

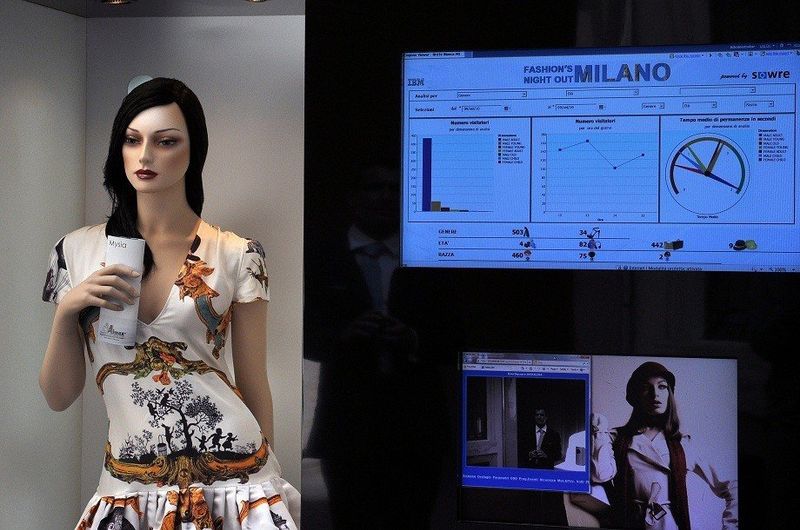

Now take this digital experience into physical stores. EyeSee mannequins, which use embedded cameras to determine your gender, race, age, and facial expressions in order to understand whether you like what you are looking at, are now being deployed in stores (Techno Fashion World, 2013). This information, combined with location information from your mobile device, could potentially be used to establish whether you made a purchase. This powerful information can be used to determine how to rearrange a shop, what clothes to put together, and what highly personalised marketing to send afterwards. So the next time you look at a mannequin and think, “Oh, I like those clothes”, have a little think and wonder whether the mannequin is thinking the same about you. She may not be as lively as Kim Cattrall, but she could be watching you.

Figure 3: Eye See Mannequin

Terms and Conditions May Apply

How many of us actually take the time to read the terms and conditions before we accept them? A recent survey by Deloitte found that 91% of people consent to legal terms and conditions without reading them, a figure that rises to 97% among those aged 18 to 34 (Business Insider, 2017). There’s a good example of this from PC Pitstop, who hid a $1,000 prize in their terms and conditions; all the reader had to do was email them. It wasn’t until 5 months and 3,000 sales later that a user actually noticed it, emailed the team, and claimed the prize (TheUIJunkie, 2012).

Companies such as Facebook and Google have been criticised for having long-winded, misleading, and unfriendly language within their terms and conditions. A recent study found that the terms and conditions belonging to some of the most commonly used websites and applications are almost unreadable to 99% of people, with some being compared to academic journals requiring over 14 years of education to understand (Vice, 2019). This is evidence that tech companies are making it harder and harder to use their services without giving up your personal information and data.

In the documentary ‘Terms and Conditions May Apply’, it was said that Google is essentially a $500 a year service, because that’s the value that is placed on the data that you provide. More and more companies are now gathering these types of data points and learning about our interests, fears, secrets, family, and friends… and we pretty much agree to the majority of it (IMDb, 2013). One of the biggest potential issues is that this data could be used as an input to an automated flagging system based on your search and online behaviours, a similar concept to that of the film Minority Report, and there have been some examples of this in the past. In 2012, two young tourists were refused entry to the United States on security grounds because one of them had previously tweeted that he was going to “destroy America” – by which he meant ‘get drunk’... millennials, huh (BBC News, 2012). In 2009, New York comedian Joe Lipari ended up with two felony charges and spent a year proving he was not a terrorist after he was unhappy with the assistance he was given at an Apple store and paraphrased a quote from the film Fight Club on Facebook (Engadget, 2011).

Figure 4: Terms and Conditions May Apply

Final Thoughts

In 2011, Marc Andreessen stated that software is eating the world and that, in order to survive, every company must be a software company (A16z, 2011). It is almost inevitable that the convenience and enjoyment we get from the plethora of connected devices and applications lead to intrusion into our behaviours and patterns. The sharing of this type of information does come with advantages, such as the accuracy and efficiency of Google Maps (which is said to receive 20 million user contributions of information every day – that’s more than 200 contributions every second [Geospatial World, 2019]). So is the tailoring of services based on your purchasing patterns and behaviours really that bad? Or is it the randomness that enriches our lives? In the casual flow of life, if we never get a chance to partake in the art of browsing and are denied the opportunity to stumble upon something new, has our freedom been taken from us… our freedom of choice? We are now at a stage where almost every company is a data company. Data has become king and it looks like we must learn to live in the kingdom. In my next article, 'The Rise of Ethics in Artificial Intelligence (Part 2: Content Ownership)', I will talk about some areas in which AI is generating content, and the implications that this has in terms of ownership.

Until next time, I hope you enjoyed the read. GB

References

- BBC News. (2012). Caution on Twitter urged as tourists barred from US. [online] Available at: https://www.bbc.com/news/technology-16810312. (Accessed 29 November 2019).

- Business Insider. (2017). You're not alone, no one reads terms of service agreements. [online] Available at: https://www.businessinsider.com/deloitte-study-91-percent-agree-terms-of-service-without-reading-2017-11. (Accessed 24 November 2019).

- China Daily. (2019). Mobile payments continue meteoric rise. [online] Available at: https://www.chinadaily.com.cn/a/201903/21/WS5c932294a3104842260b1cc9.html. (Accessed 09 November 2019).

- Engadget. (2011). First rule of Facebook: Don't quote Fight Club. [online] Available at: https://www.engadget.com/2011/06/28/first-rule-of-facebook-dont-quote-fight-club/. (Accessed 11 January 2020).

- Figure 0: Cover. [online] Available at: https://unsplash.com/photos/IhcSHrZXFs4.

- Figure 1: The Truman Show. [online] Available at: https://www.dazeddigital.com/film-tv/article/40216/1/the-original-truman-show-screenplay-was-way-way-darker. (Accessed 09 November 2019).

- Figure 2: China’s Social Credit System. [online] Available at: https://www.visualcapitalist.com/the-game-of-life-visualizing-chinas-social-credit-system/. (Accessed 09 November 2019).

- Figure 3: Eye See Mannequin. [online] Available at: http://www.technofashionworld.com/eye-see-mannequin-the-smart-and-well-behaved-mannequin/. (Accessed 09 November 2019).

- Figure 4: Terms and Conditions May Apply. [online] Available at: https://images-na.ssl-images-amazon.com/images/I/81pcQLUXs6L._RI_.jpg. (Accessed 11 January 2020).

- Fortune. (2019). China Banned 23 Million People From Traveling Last Year for Poor ‘Social Credit’ Scores. [online] Available at: https://fortune.com/2019/02/22/china-social-credit-travel-ban/. (Accessed 09 November 2019).

- Geospatial World. (2019). Most asked questions about the way Google Maps uses data. [online] Available at: https://www.geospatialworld.net/blogs/most-asked-questions-about-the-way-google-maps-uses-data/. (Accessed 12 January 2020).

- IMDb. (2013). Terms and Conditions May Apply. [online] Available at: https://www.imdb.com/title/tt2084953/. (Accessed 24 November 2019).

- Independent. (2016). China wants to give all of its citizens a score – and their rating could affect every area of their lives. [online] Available at: https://www.independent.co.uk/news/world/asia/china-surveillance-big-data-score-censorship-a7375221.html. (Accessed 09 November 2019).

- Reolink. (2018). How Many Times Are You Caught on Security Camera per Day. [online] Available at: https://reolink.com/how-many-times-you-caught-on-camera-per-day/. (Accessed 09 November 2019).

- Techno Fashion World. (2013). Eye See Mannequin, the Smart and well-behaved mannequin. [online] Available at: http://www.technofashionworld.com/eye-see-mannequin-the-smart-and-well-behaved-mannequin/. (Accessed 09 November 2019).

- TheUIJunkie. (2012). The Company That Gave $1,000 To Anyone Who Had Read Their Terms Of Service. [online] Available at: https://theuijunkie.com/pc-pitstop-prize/. (Accessed 11 January 2020).

- Vice. (2019). Most Online ‘Terms of Service’ Are Incomprehensible to Adults, Study Finds. [online] Available at: https://www.vice.com/en_us/article/xwbg7j/online-contract-terms-of-service-are-incomprehensible-to-adults-study-finds. (Accessed 24 November 2019).