The Rise of Ethics in Artificial Intelligence (Part 3: Trustworthy AI)


In my previous article ‘The Rise of Ethics in Artificial Intelligence (Part 2: Content Ownership)’, I highlighted some interesting stories that blur the lines between technology and humanity. In this final article of the series, I will outline what some of the industry leaders are doing in order to ensure trustworthy AI, and also some interesting areas to keep an eye on. All thoughts, views and comments are my own.

The Ruler of the World

In 2018, at a Special Town Hall Event with Google and YouTube (Eventbrite, 2018), Google CEO Sundar Pichai said that “AI will have a bigger impact on the world than some of the most ubiquitous innovations in history” and that it is “more profound than electricity or fire” (Cranz, 2018). Exactly how large that impact will be, and how profound, is under constant debate amongst industry leaders and public figures. Some of the predictions, such as AI taking over the world, creating a new wave of cyberattacks, superhuman hacking, and even autonomous weapon systems, sound like a dystopian nightmare taken straight from an episode of Black Mirror. In September 2017, Russian President Vladimir Putin said that AI will create “colossal opportunities, but also threats that are difficult to predict” and that whoever becomes the leader in artificial intelligence “will become the ruler of the world” (Vincent, 2017). Elon Musk replied to these comments on Twitter, stating that the “competition for AI superiority at national level will most likely be the cause of WW3” (Lant, 2017). Even the great theoretical physicist Stephen Hawking advised creators of AI to “employ best practice and effective management” as the “success in creating effective AI, could be the biggest event in the history of our civilization. Or the worst. We just don’t know” (Kharpal, 2017). ‘We just don’t know’ sums up the uncertainty that surrounds the inevitable progression of AI. With that in mind, let us look at some of the safeguards that are being put in place to try to ensure that AI is created for good and not evil.

Figure 1: AI Army

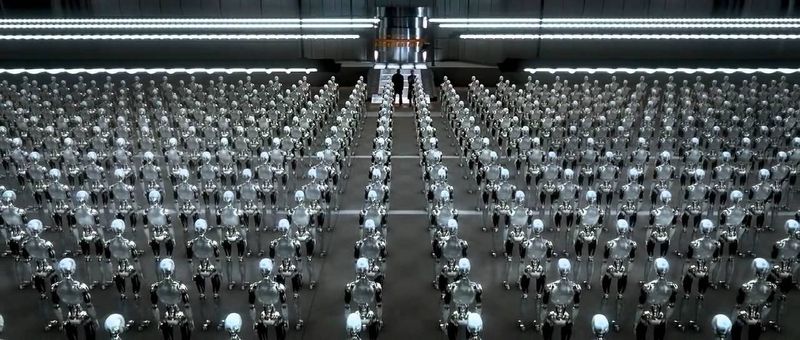

The Ethics of Trustworthy AI

The rapid rise of AI and machine learning has led to growing calls to examine its impact on society, with experts warning of the technology's potential misuse if it isn't controlled.

Strategy

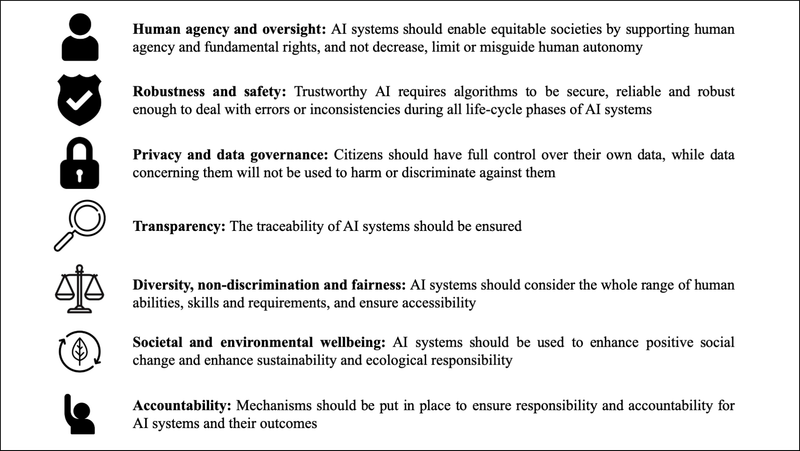

One of the bodies examining this is the High-Level Expert Group on AI (HLEG-AI). This independent expert group was created by the European Commission in 2018 to define a European strategy on AI and to focus on human-centred policies around ethical, legal, and societal issues. Towards the end of 2018, the group released the first draft of its ‘Ethics Guidelines for Trustworthy Artificial Intelligence’, the final version of which was published in April 2019 (European Commission, 2019a). These guidelines outline the following seven essentials for achieving trustworthy AI.

Figure 2: Ethics Guidelines for Trustworthy AI

Complementing this document, the group followed up in June 2019 by publishing the ‘Policy and Investment Recommendations for Trustworthy Artificial Intelligence’ (European Commission, 2019b). Professor Barry O’Sullivan, who is the Vice Chair of the HLEG-AI, President of the European Artificial Intelligence Association (EurAI), and founding Director of the Insight Centre for Data Analytics at UCC, is holding a Trustworthy AI Event this coming Wednesday 19th February at the Aviva Stadium, Dublin (Eventbrite, 2020). The event is aimed at discussing these recent developments and guidelines related to trustworthy AI, as well as the emerging regulations to come. More can be read in the recent Silicon Republic article, ‘Trustworthy artificial intelligence – is new EU regulation coming for AI?’ (Silicon Republic, 2020).

Research

In addition to expert groups looking into these ethical guidelines, a number of advancements in research have also been under way. In 2018, Stephen Schwarzman, the 82nd richest person in the world, donated $350 million to the Massachusetts Institute of Technology to set up the MIT Schwarzman College of Computing, which focuses on the opportunities, challenges, and ethical and policy implications posed by the rise of AI. In June 2019, he then donated $188 million to the University of Oxford, its largest single donation in hundreds of years, to help fund research into the ethical implications of AI. Schwarzman stated, “What motivates me, among other things, is to have the core of humanities, the basic values of people, be considered in the context of technological development. Technology left unaffected would trample over certain aspects of human behaviour and human opportunities” (Carpani, 2019). Tim Berners-Lee, the inventor of the World Wide Web, voiced his support for Schwarzman’s donation to Oxford: “It is essential that philosophy and ethics engages with those disciplines developing and using AI,” he said in a statement shared by the university. “If AI is to benefit humanity we must understand its moral and ethical implications,” he added (Iyengar, 2019).

Future Trends

The following are just some interesting areas that I feel will be worth keeping an eye on as we move towards ensuring AI ethics is at the forefront of the creation of AI applications. I may write an article going into more future trends and further expanding the areas below.

Biometrics

We are all becoming more familiar with biometrics due to the advancement of technology in our mobile devices. It is estimated that the value of the global biometric market will grow from $33 billion to $65.3 billion by 2024 (Markets and Markets, 2018). Both government and business are starting to adopt this technology at an alarming rate. But is this progress or an invasion of our privacy and erosion of our freedom?

Identification: In 2016, Saudi Arabia enforced new regulations that required all telecommunication subscribers to register their fingerprints (Arab News, 2016). Also in 2016, both Hungary and Turkey started distributing biometric identity cards that contain a chip that can store people’s fingerprints, electronic signature, social security, and tax information (Mayhew, 2016a; Mayhew, 2016b). In 2017, Pakistan introduced biometric passports to reduce the risk of forgery and human trafficking by authenticating the identity of each traveller (Tribune, 2016). In 2018, India decided to continue with the world’s largest biometric identification program, in which the name, gender, date of birth, fingerprints, iris scans, and photo of almost the entire population (1 billion people) are linked to a 12-digit number and registration card that can be used for a range of government services, banking and telephony (Ayyar, 2018).

Authentication: In 2017, Dubai airport announced that it would use a virtual-aquarium-style tunnel, fitted with 80 cameras, to scan passengers’ faces as they walk through security clearance (Ong, 2017). Last year, Barclays, in collaboration with Hitachi, developed a finger vein scanner that uses infra-red technology to identify specific vein patterns in an attempt to further secure transactions (Barclays, 2019).

Robotic Responsibility

In one of my previous articles, ‘Are Humans the Next Horse? The Rise of the Robots’, I discussed the impact of automation on the workforce. But what could this mean from a societal point of view?

Robot Tax: No one knows for certain the exact impact that AI will have on the workforce, but certain tasks will inevitably be automated. How will displaced workers find meaning if their careers have come to a premature conclusion? And what will happen if companies simply no longer need human workers? One idea that Bill Gates has suggested is that robots should be taxed. Not only would this give society time to adjust to the adoption of this technology, but it could also pay for further education opportunities and employment in soft-skilled careers. This idea needs a lot of consideration, and some of the advantages and disadvantages of the approach are outlined quite nicely in the Emerj article ‘Robot Tax – A Summary of Arguments For and Against’ (Walker, 2019).

Citizenship: In 2017, Sophia the Robot from Hanson Robotics was famously given citizenship of Saudi Arabia (Stone, 2017). This is a really interesting concept that has caused a lot of controversy. Regardless of how woke Sophia has been programmed to be, does she deserve the full set of citizens’ rights?

Final Thoughts

It almost feels like every day there is a new advancement in technology. Companies are fighting to be the first to achieve AI dominance. Some of the areas above outline how we are trying to put guardrails in place to ensure that this technology is used to complement and augment the way we live in a positive manner.

With Sophia, is the prioritisation of technological utopia over the rights and lives of humans the right thing to do? Does this give permission to remove human responsibility should something go wrong? There are a lot of questions to answer here to understand which direction we are heading in. We are constantly pushing the boundaries between technology and human interaction, a boundary that is becoming thinner and thinner. But is it possible to control the genie once it is out of the bottle? Should we hold onto our three wishes just in case?

Until next time, I hope you enjoyed the read. GB

References

- Arab News. (2016). Fingerprint to be recorded for issuance of mobile SIM. [online] Available at: https://www.arabnews.com/saudi-arabia/news/870691. (Accessed 12 February 2020).

- Ayyar, Kamakshi. (2018). The World's Largest Biometric Identification System Survived a Supreme Court Challenge in India. [online] Available at: https://time.com/5388257/india-aadhaar-biometric-identification/. (Accessed 12 February 2020).

- Barclays. (2019). Barclays and Hitachi launch next-generation finger vein scanner. [online] Available at: https://home.barclays/news/press-releases/2019/11/barclays-and-hitachi-launch-next-generation-finger-vein-scanner/. (Accessed 17 February 2020).

- Carpani, Jessica. (2019). Oxford University given £150m by US billionaire to investigate AI in biggest ever donation. [online] Available at: https://www.telegraph.co.uk/news/2019/06/19/oxford-university-given-150m-us-billionaire-found-ai-institute/. (Accessed 12 February 2020).

- Cranz, Alex. (2018). Google CEO Says AI Is 'More Profound Than, I Dunno, Electricity or Fire'. [online] Available at: https://gizmodo.com/google-ceo-says-ai-is-more-profound-than-i-dunno-elec-1822635900. (Accessed 5 February 2020).

- European Commission. (2019a). Ethics Guidelines for Trustworthy AI. [online] Available at: https://ec.europa.eu/futurium/en/ai-alliance-consultation/guidelines. (Accessed 5 February 2020).

- European Commission. (2019b). Policy and investment recommendations for trustworthy Artificial Intelligence. [online] Available at: https://ec.europa.eu/digital-single-market/en/news/policy-and-investment-recommendations-trustworthy-artificial-intelligence. (Accessed 12 February 2020).

- Eventbrite. (2018). A Special Town Hall Event with Google and YouTube. [online] Available at: https://www.eventbrite.com/e/a-special-town-hall-event-with-google-and-youtube-tickets-41722062813#. (Accessed 5 February 2020).

- Eventbrite. (2020). Trustworthy AI Event, Aviva Stadium. [online] Available at: https://www.eventbrite.ie/e/trustworthy-ai-event-aviva-stadium-entrance-a-tickets-89767461895?aff=ebdssbeac. (Accessed 12 February 2020).

- Figure 0: Cover. [online] Available at: https://miro.medium.com/max/7680/1*Vx3iseHaMpM6156T8vx3Dg.jpeg

- Figure 1: AI Army. [online] Available at: https://i.pinimg.com/originals/8c/90/9d/8c909dc7ee5327ad5a18912f8c1e2865.jpg

- Figure 2: Ethics Guidelines.

- Gorey, Colm. (2019). EU reveals its 7 essentials for achieving trustworthy AI. [online] Available at: https://www.siliconrepublic.com/machines/ai-ethics-eu-guidelines-2019. (Accessed 5 February 2020).

- Iyengar, Rishi. (2019). Stephen Schwarzman gives $188 million to Oxford to research AI ethics. [online] Available at: https://edition.cnn.com/2019/06/19/tech/stephen-schwarzman-oxford-ai-donation/index.html. (Accessed 13 February 2020).

- Kharpal, Arjun. (2017). Stephen Hawking says A.I. could be ‘worst event in the history of our civilization’. [online] Available at: https://www.cnbc.com/2017/11/06/stephen-hawking-ai-could-be-worst-event-in-civilization.html. (Accessed 5 February 2020).

- Lant, Karla. (2017). Elon Musk: Competition for AI Superiority at National Level Will Be the “Most Likely Cause of WW3”. [online] Available at: https://futurism.com/elon-musk-competition-for-ai-superiority-at-national-level-will-be-the-most-likely-cause-of-ww3. (Accessed 5 February 2020).

- Markets and Markets. (2018). Biometric System Market by Authentication Type (Single-Factor: Fingerprint, Iris, Palm Print, Face, Voice; Multi-Factor), Offering (Hardware, Software), Functionality (Contact, Noncontact, Combined), End User, and Region - Global Forecast to 2024. [online] Available at: https://www.marketsandmarkets.com/Market-Reports/next-generation-biometric-technologies-market-697.html?gclid=CjwKCAiA4Y7yBRB8EiwADV1haSyazciY7-Q_PNNWkAjSlJT1WZQ2-W3CrfYrHm_lyLFRqnPej9WpiBoCp30QAvD_BwE. (Accessed 12 February 2020).

- Mayhew, Stephen. (2016a). Hungary begins issuing biometric id cards. [online] Available at: https://www.biometricupdate.com/201601/hungary-begins-issuing-biometric-id-cards. (Accessed 12 February 2020).

- Mayhew, Stephen. (2016b). Turkey starts distributing biometric identity cards. [online] Available at: https://www.biometricupdate.com/201603/turkey-starts-distributing-biometric-identity-cards. (Accessed 12 February 2020).

- Ong, Thuy. (2017). Dubai Airport is going to use face-scanning virtual aquariums as security checkpoints. [online] Available at: https://www.theverge.com/2017/10/10/16451842/dubai-airport-face-recognition-virtual-aquarium. (Accessed 17 February 2020).

- Silicon Republic. (2020). Trustworthy artificial intelligence – is new EU regulation coming for AI? [online] Available at: https://www.siliconrepublic.com/machines/trustworthy-ai-eu-regulation. (Accessed 16 February 2020).

- Stone, Zara. (2017). Everything You Need To Know About Sophia, The World's First Robot Citizen. [online] Available at: https://www.forbes.com/sites/zarastone/2017/11/07/everything-you-need-to-know-about-sophia-the-worlds-first-robot-citizen/#1112e71446fa. (Accessed 17 February 2020).

- Tribune. (2016). Biometric passports to be introduced in 2017. [online] Available at: https://tribune.com.pk/story/1103021/biometric-passports-to-be-introduced-in-2017/. (Accessed 12 February 2020).

- Vincent, James. (2017). Putin says the nation that leads in AI ‘will be the ruler of the world’. [online] Available at: https://www.theverge.com/2017/9/4/16251226/russia-ai-putin-rule-the-world. (Accessed 5 February 2020).

- Walker, Jon. (2019). Robot Tax – A Summary of Arguments “For” and “Against”. [online] Available at: https://emerj.com/ai-sector-overviews/robot-tax-summary-arguments/. (Accessed 17 February 2020).